Emergency response: turning raw data into actionable, real-time insight

After a wildfire ravages a home, how long does the insurance claim take? How many police, emergency crews and council teams have to comb through the rubble, take pictures and document the tragic events? Communities and insurance companies can need 6 to 9 months, and thousands of experts and volunteers, to get a precise overview of the impact.

At Unleash live, we asked ourselves: what if it could be done faster and with fewer resources?

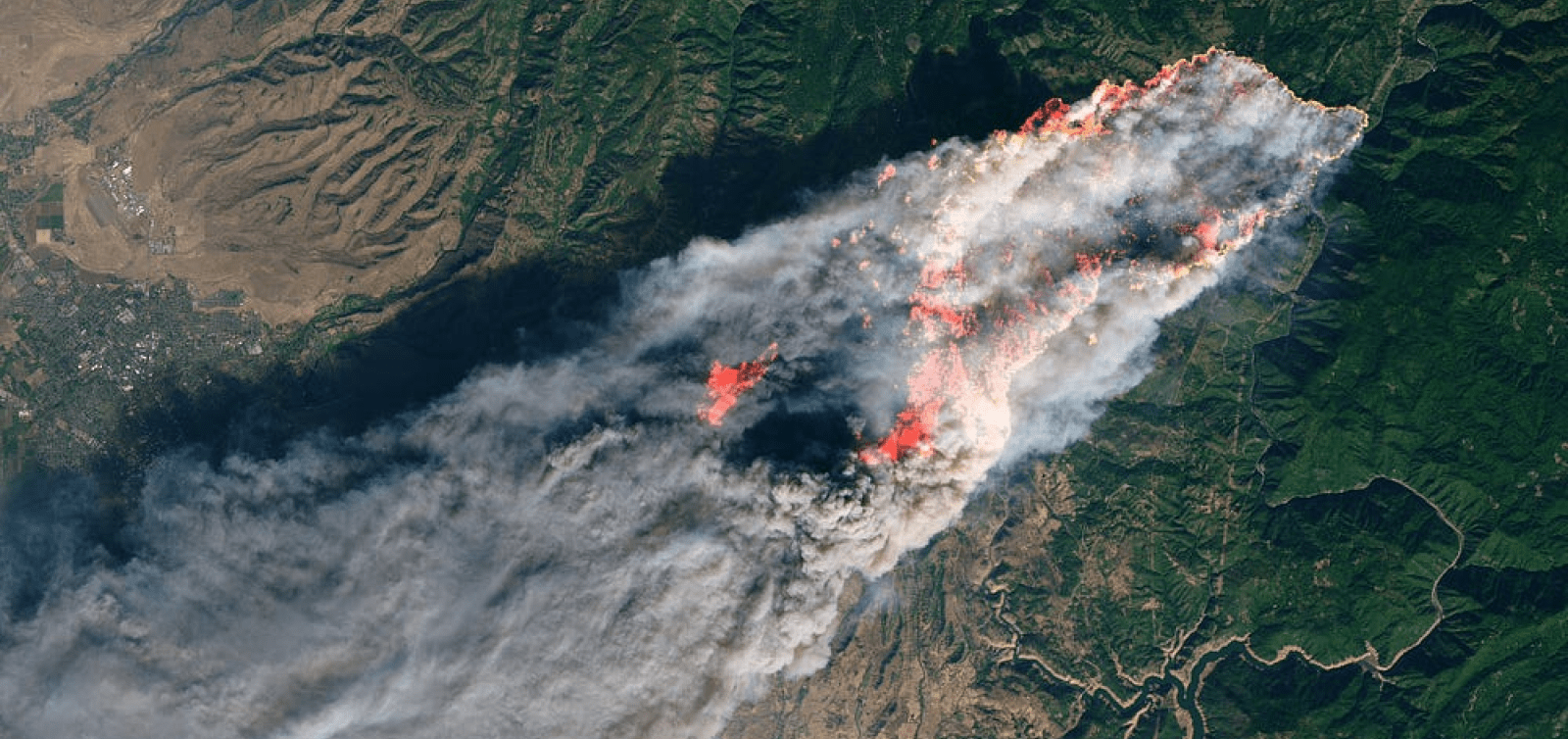

When wildfires swept through the Northern California town of Paradise in early November, Unleash live wanted to help first responders gain operational clarity quickly, to assist recovery efforts and speed up the process for homeowners.

Through the incredible work of Butte County together with DJI, DroneDeploy, Survae and Scholar Farms, a high-resolution dataset of the affected area was assembled.

Using the Camp Fire dataset, Unleash live analysed 17,000 acres of high-resolution imagery of Paradise, extracting valuable insights such as the count, GPS location and square footage of affected structures, the location and count of intact structures, and the number of damaged vehicles.
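The post does not say how square footage was derived. As a minimal sketch, assuming the model outputs each structure's footprint as a polygon of (longitude, latitude) vertices, the area can be approximated by projecting the vertices onto a local flat plane and applying the shoelace formula. The function name and input format are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius in metres
SQ_FT_PER_SQ_M = 10.7639

def footprint_area_sqft(polygon_lonlat):
    """Approximate the area (square feet) of a small polygon given as (lon, lat) vertices in degrees.

    Hypothetical helper: uses a local equirectangular projection around the polygon,
    which is adequate for building-sized shapes, then the shoelace formula.
    """
    if len(polygon_lonlat) < 3:
        raise ValueError("a polygon needs at least three vertices")
    lon0, lat0_deg = polygon_lonlat[0]
    mean_lat = math.radians(sum(lat for _, lat in polygon_lonlat) / len(polygon_lonlat))
    # Project each vertex to metres relative to the first vertex.
    pts = [
        (math.radians(lon - lon0) * EARTH_RADIUS_M * math.cos(mean_lat),
         math.radians(lat - lat0_deg) * EARTH_RADIUS_M)
        for lon, lat in polygon_lonlat
    ]
    # Shoelace formula; abs() makes the result independent of vertex order.
    twice_area = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0 * SQ_FT_PER_SQ_M
```

For footprints spanning more than a few hundred metres, a proper map projection (for example a UTM zone) would be the safer choice.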

Unleash live achieved this in near real time. As the drones flew, data was ingested over WiFi/4G or satellite links (with post-flight upload as a fallback), AI video analytics were applied in flight, and actionable alerts were pushed quickly to mobiles and tablets on the ground.
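The ingest path with its post-flight fallback can be sketched as a small router. This is an illustration under stated assumptions, not Unleash live's implementation: `FrameUplink` and the `send_fn` callables are hypothetical stand-ins for real WiFi, 4G and satellite clients, each returning `True` when a frame is delivered.

```python
from collections import deque

class FrameUplink:
    """Hypothetical uplink: tries live links in priority order and buffers
    frames for post-flight upload when no link is available."""

    def __init__(self, links):
        # links: list of (name, send_fn) pairs in priority order, e.g. WiFi, 4G, satellite.
        # Each send_fn(frame) is assumed to return True on success, False if the link is down.
        self.links = links
        self.backlog = deque()

    def send(self, frame):
        """Return the name of the link that delivered the frame, or None if it was buffered."""
        for name, send_fn in self.links:
            if send_fn(frame):
                return name
        # No live link succeeded: keep the frame for upload after landing.
        self.backlog.append(frame)
        return None

    def flush_post_flight(self, upload_fn):
        """Upload buffered frames in capture order; return how many were sent."""
        sent = 0
        while self.backlog:
            upload_fn(self.backlog.popleft())
            sent += 1
        return sent
```

Keeping the backlog in capture order means the post-flight upload can be analysed exactly as if it had streamed live, just later.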

Unleash live Fire Damage A.I. — Camp Fire, Paradise, CA

Because Unleash live can integrate other GIS and sensor data, we were quickly able to extend these insights even further.
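One common way to combine detections with other GIS data is a spatial join, for example attaching each damage pin to the land parcel it falls in. The sketch below assumes a hypothetical parcel layer given as polygons of (lon, lat) vertices and uses a ray-casting point-in-polygon test; treating coordinates as planar is a simplification that is reasonable at parcel scale.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside polygon [(x, y), ...]."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from (x, y) towards +x cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def join_detections_to_parcels(detections, parcels):
    """Attach a parcel ID to each detection pin (hypothetical data layout).

    detections: list of dicts with 'lon' and 'lat' keys.
    parcels: dict mapping parcel_id -> polygon of (lon, lat) vertices.
    Returns new dicts with a 'parcel_id' key (None if the pin is outside every parcel).
    """
    joined = []
    for det in detections:
        parcel_id = next(
            (pid for pid, poly in parcels.items()
             if point_in_polygon(det["lon"], det["lat"], poly)),
            None,
        )
        joined.append({**det, "parcel_id": parcel_id})
    return joined
```

For thousands of parcels, a spatial index would avoid testing every polygon for every pin.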

How We Did It

Training a high-performing custom AI for damage detection

Through our partnership with DJI Enterprise and emergency services, we trained a custom AI algorithm that identifies fire-affected property in live geo-referenced video feeds or geo-referenced images.
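Geo-referencing is what turns a detection in a frame into a location on the map. A minimal sketch, assuming a camera pointing straight down over flat ground, a known ground sample distance (metres per pixel) and known coordinates for the image centre; the function and its parameters are hypothetical and ignore lens distortion and terrain.

```python
import math

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius in metres

def pixel_to_lonlat(px, py, image_w, image_h, center_lon, center_lat, gsd_m, heading_deg=0.0):
    """Convert a pixel position in a nadir image to (lon, lat).

    heading_deg is the direction the top of the image faces, clockwise from true north.
    """
    # Offset from the image centre in metres; image y grows downward.
    right_m = (px - image_w / 2) * gsd_m
    up_m = (image_h / 2 - py) * gsd_m
    # Rotate the image-frame offset into east/north components.
    h = math.radians(heading_deg)
    east_m = right_m * math.cos(h) + up_m * math.sin(h)
    north_m = -right_m * math.sin(h) + up_m * math.cos(h)
    # Convert metre offsets to degree offsets (small-distance approximation).
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    dlon = math.degrees(east_m / (EARTH_RADIUS_M * math.cos(math.radians(center_lat))))
    return center_lon + dlon, center_lat + dlat
```

Applying this to the centre of each detected bounding box gives a pin location; oblique video frames would need the full camera pose instead.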

To build the AI, various police and emergency services uploaded high-resolution datasets to Unleash live in early 2018. Using our cloud AI server infrastructure, powered by high-performance NVIDIA GPUs and AWS, we trained a neural network and deployed it to our AI sandbox within a few weeks.

Early tests with emergency crews on the ground, streaming live video back to Unleash live, showed inference accuracy above 80%. We also tested the AI on footage from other fires, such as the Santa Rosa fires and the fires around Athens, Greece. These early results were very encouraging for the team and suggest the AI could be extended to future large-scale fires.
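The post does not define how "accuracy" was measured. One common convention for object detectors, shown here only as an illustration, is to match predicted boxes to ground-truth boxes by intersection over union (IoU) and report precision and recall; the 0.5 IoU threshold and greedy matching are assumptions, not Unleash live's stated method.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedily match predictions (highest score first) to ground-truth boxes.

    predictions: list of (score, box); ground_truth: list of boxes.
    A prediction is a true positive if it overlaps a not-yet-matched ground-truth
    box with IoU >= iou_threshold; otherwise it counts as a false positive.
    """
    matched = set()
    tp = 0
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            tp += 1
    fp = len(predictions) - tp
    fn = len(ground_truth) - tp
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / (tp + fn) if ground_truth else 0.0
    return precision, recall
```

Precision captures false positives such as burnt trees flagged as structures; recall captures damaged structures the model missed.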

Extracting insights from the Camp Fire dataset

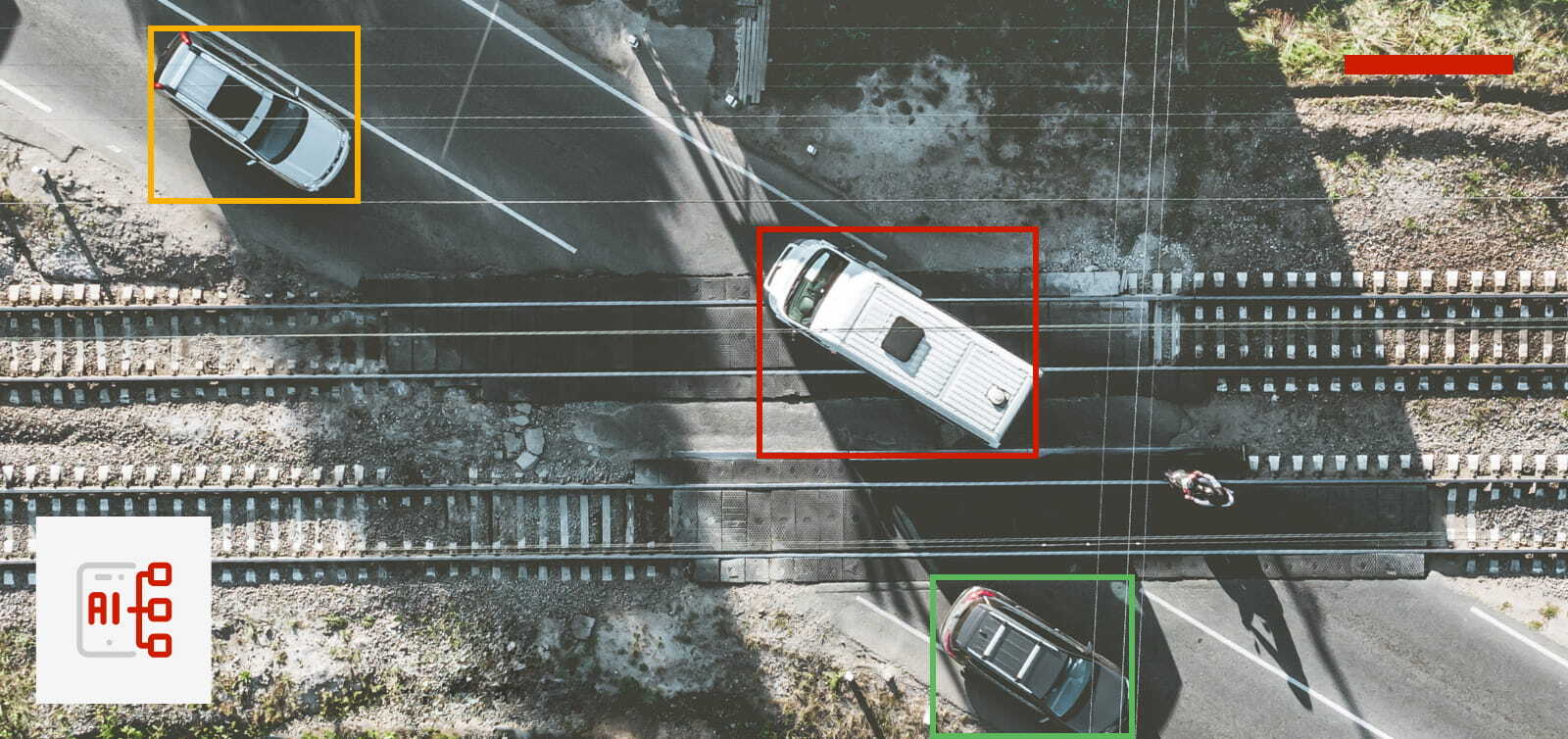

Once we had access to the Camp Fire high-resolution imagery captured by DJI drones, we selected several large test areas and deployed our AI algorithm.
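Large aerial images are usually too big to feed to a detector in one pass, so a typical approach (assumed here, not described in the post) is to cut each test area into overlapping tiles. The tile size and overlap below are illustrative values only.

```python
def tile_windows(width, height, tile=1024, overlap=128):
    """Yield (x, y, w, h) windows covering a width x height image.

    Adjacent tiles overlap by `overlap` pixels so a structure cut by one tile
    boundary appears whole in a neighbouring tile. The last tile in each row and
    column is shifted inward so windows stay full-size when the image is large enough.
    """
    if overlap >= tile:
        raise ValueError("overlap must be smaller than the tile size")
    stride = tile - overlap

    def starts(length):
        if length <= tile:
            return [0]
        positions = list(range(0, length - tile, stride))
        positions.append(length - tile)  # final tile flush with the far edge
        return positions

    for y in starts(height):
        for x in starts(width):
            yield x, y, min(tile, width - x), min(tile, height - y)
```

Because tiles overlap, the same structure can be detected twice; duplicates are commonly merged afterwards, for example with non-maximum suppression.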

We ran the AI on several subsets of the area to assess its accuracy. Below is an example of our first-pass output.

Within minutes, the Unleash live Fusion Atlas presents the output: affected homes are marked with pins on a layered map that can be viewed in any browser or on a tablet. This gives operational clarity to responders as well as to utilities, councils and families.
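The post does not describe Fusion Atlas's internal format. As an illustration of how detection pins are commonly exchanged with web maps, the sketch below builds a GeoJSON FeatureCollection (RFC 7946, which orders coordinates as longitude then latitude); the record fields and sample values are hypothetical.

```python
import json

def detections_to_geojson(detections):
    """Build a GeoJSON FeatureCollection of point pins from detection records.

    Each record is a dict with 'lon', 'lat' and any extra properties
    (for example a label or a confidence score).
    """
    features = []
    for det in detections:
        props = {k: v for k, v in det.items() if k not in ("lon", "lat")}
        features.append({
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [det["lon"], det["lat"]]},
            "properties": props,
        })
    return {"type": "FeatureCollection", "features": features}

if __name__ == "__main__":
    # Hypothetical example pin.
    pins = [{"lon": -121.62, "lat": 39.76, "label": "burnt_structure", "score": 0.91}]
    print(json.dumps(detections_to_geojson(pins), indent=2))
```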

Others may want a visual before-and-after comparison, along with AI overlays. Both are available through our difference detection tools.
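How the difference detection tools work internally is not described here. The simplest form of the idea, shown purely as a hypothetical illustration, is per-pixel differencing of co-registered before and after images with a threshold. Real imagery would normally be handled as arrays (for example with NumPy) and must be accurately aligned first.

```python
def change_mask(before, after, threshold=30):
    """Flag pixels whose brightness changed by more than `threshold` between two
    co-registered grayscale images, given as equal-sized lists of rows of 0-255 values."""
    if len(before) != len(after) or any(len(rb) != len(ra) for rb, ra in zip(before, after)):
        raise ValueError("images must be co-registered and the same size")
    return [
        [abs(a - b) > threshold for b, a in zip(row_before, row_after)]
        for row_before, row_after in zip(before, after)
    ]

def changed_fraction(before, after, threshold=30):
    """Share of pixels flagged as changed: a crude, area-level change indicator."""
    mask = change_mask(before, after, threshold)
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total if total else 0.0
```

The resulting mask can be drawn as an overlay alongside the AI detections.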

Learnings and Next Steps

- Like any AI, this model still returns false positives: scrub or burnt trees are sometimes incorrectly identified as burnt structures. State-of-the-art detection models typically reach 80–90% accuracy in complex scenery, so at least one in ten objects will be misidentified, which we have to accept.

- AI helps with large, dispersed quantities of raw data; it is not an engineering tool. It excels at grasping a complex situation quickly, focusing people on the most important aspects and removing the hazardous, boring and routine work.

- AI will never fully replace a human. This AI was trained for only two weeks on Carr Fire imagery captured at different heights and in different lighting conditions, and then transferred to the Camp Fire. Considering this, it holds up very well, achieving above 80% inference accuracy.

- Aerial data is different from street-level data. Unleash live ingests footage from ground cameras as well as from drones and satellites, giving developers and enterprises the flexibility to customise their AI models to their specific needs. Identifying a burnt car at street level requires a different dataset from recognising the same car from an aerial or satellite perspective.
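To make the 80–90% range concrete: if N objects are flagged and each is correct with probability a, the expected number of misidentified objects is N(1 − a). The batch of 1,000 below is purely illustrative.

```latex
\begin{aligned}
\mathbb{E}[\text{misidentified}] &= N\,(1 - a) \\
N = 1000,\ a = 0.9:&\quad 1000 \times (1 - 0.9) = 100 \\
N = 1000,\ a = 0.8:&\quad 1000 \times (1 - 0.8) = 200
\end{aligned}
```

So for every 1,000 flagged structures, somewhere between 100 and 200 may need a human check, which is why the output is best used to focus human attention rather than replace it.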

At Unleash live, together with our partners, we are now working to overcome these challenges through our unique video AI analytics capability and further refinement of our AI models. In video, not every single frame needs to identify the object: a reliable detection roughly once per second is enough to raise overall inference accuracy. All of this will help us extend our AI analysis to many more enterprise use cases.
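The "one good fix per second" idea can be sketched as temporal aggregation: per-frame results for a tracked object are grouped into one-second windows, and a window counts as confirmed if any frame in it detected the object. This is a hypothetical illustration of the principle, not Unleash live's pipeline; it assumes an upstream tracker already links frames to the same object.

```python
import math
from collections import defaultdict

def confirmed_windows(frame_detections, window_s=1.0):
    """Collapse per-frame detections for one tracked object into per-window confirmations.

    frame_detections: iterable of (timestamp_s, detected) pairs.
    Returns a dict mapping window index -> True if the object was detected
    in at least one frame of that window.
    """
    windows = defaultdict(bool)
    for t, detected in frame_detections:
        idx = math.floor(t / window_s)
        windows[idx] = windows[idx] or detected
    return dict(windows)

def window_hit_rate(frame_detections, window_s=1.0):
    """Fraction of time windows in which the object was confirmed at least once."""
    windows = confirmed_windows(frame_detections, window_s)
    return sum(windows.values()) / len(windows) if windows else 0.0
```

With 30 fps video, an object detected in only every tenth frame still counts as confirmed in each second where those detections occur. The any-frame rule favours recall; requiring k detections per window is a common way to suppress single-frame false positives.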

Helping emergency services and councils worldwide is extremely exciting for our team, and we invite everyone to contribute to making this capability even more valuable. Tell us what you would do to support better decision-making across vast, geographically dispersed areas.

Get Started with Unleash live

Get in touch with one of our specialist team members to learn how your business can take advantage of live streaming video and AI analytics today.