How True Aerial Autonomy Will Amplify Asset Inspections

Have you ever attempted to dodge a bullet? We will show you how and why it is relevant for your infrastructure asset management.

What does ‘drone autonomy’ really mean? How can asset operators utilize these capabilities operationally and technically? We explore the definition of autonomy and how to start deploying this today to deliver value, where autonomy needs to be in the future, and how Unleash live leverages these applications.

The world has not yet arrived at autonomy, but we are moving toward that goal at an accelerating pace. This progress creates significant opportunities for individuals and how they work, for businesses, for the economy, and for society. AI is a key ingredient in the progress toward autonomy. An analysis of several hundred AI use cases across various industries found that the most advanced deep learning techniques, which deploy artificial neural networks, could account for as much as $3.5 trillion to $5.8 trillion in annual value, or 40% of the value created by all analytics techniques.

What is true autonomy?

There are different stages on the journey towards autonomy. In the Unmanned Aerial Vehicle (UAV) market - often called the ‘drone’ market - demand and growth create rich opportunities for running operations across our vast infrastructure more efficiently. Currently, almost every single drone mission in the field is manual. The industry is keen to progress to extended visual line of sight (EVLOS) and beyond visual line of sight (BVLOS) operations, in which drones are flown just beyond the pilot's line of sight in the field or remotely from a control center. Our infrastructure clients and the hardware industry aim for this to become the norm. Essentially, humans can be removed from the often time-consuming and tiring in-field control of the hardware and can instead focus on working collaboratively with subject matter experts in real time to determine the next course of action while the UAV completes the task autonomously.

Industry workflows are evolving towards automated BVLOS operations

For us at Unleash live, several ingredients need to come together to achieve this true autonomy:

- Reliable connectivity and processing. We cannot rely on any network that may drop out, even if it is for only 0.1% of the time. Ultimately, we need to find new forms of connectivity with built-in redundancies. Current networks and processing induce latency; for true autonomy, this paradigm needs to be overcome.

- Smarter navigation. Drones already have amazing onboard sense-and-avoid capabilities. They may use GPS/GNSS receivers, inertial navigation systems (INS), LiDAR scanners, ultrasonic sensors, and visual cameras to navigate autonomously. But more needs to be done, and there is much room for improvement to cater to all imaginable scenarios.

- Airspace awareness. ADS-B, UTM, and point-to-point and drone-to-drone communication to increase awareness are steps in the right direction, but scaled implementation and standards are still lacking.

- Many-to-many. We need to break the paradigm of one pilot to one drone and instead think about robust swarms, with many more people and machines involved in navigating, viewing flight data, collaborating, and acting in real time, supported by predictive algorithms.

- Enhanced telemetry. This means taking in not only drone data and IoT sensor data but also high-definition real-time and predictive weather data, surrounding terrain, and 3D data of whatever is relevant to the mission.

- Custom analytics. Standard photogrammetry alone is not enough; you need a custom visual machine learning and data analytics capability for the specific mission and use case you want to deliver.

- All of the above needs to happen in real time and in high definition. When we say real time, we mean zero latency, all the time, every time. Data processing needs to happen instantly, and high definition means that we cannot rely on video or image streams in 720p or lower. We need high-performance visual analytics to provide the insight that our customers and the drone require.
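To put the 0.1% figure from the connectivity point in perspective, here is a rough sketch of the arithmetic, not a model of any real network. It assumes each link is down 0.1% of the time and that links fail independently of one another, which is optimistic because real links often share failure causes. The function names are illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def combined_downtime(per_link_downtime: float, links: int) -> float:
    """Fraction of time that every link is down at once.

    Assumes links fail independently, so their downtime fractions multiply.
    """
    return per_link_downtime ** links


def downtime_per_year_hours(downtime_fraction: float) -> float:
    """Convert a downtime fraction into expected hours of outage per year."""
    return downtime_fraction * HOURS_PER_YEAR


if __name__ == "__main__":
    # 0.001 is the 0.1% drop-out rate mentioned in the text.
    for links in (1, 2, 3):
        fraction = combined_downtime(0.001, links)
        seconds = downtime_per_year_hours(fraction) * 3600
        print(f"{links} link(s): about {seconds:.3f} seconds of outage per year")
```

Under these assumptions, a single link at 0.1% downtime is unavailable for roughly 8.8 hours a year, while two independent links are both down for about half a minute a year. That gap is why built-in redundancy matters more than squeezing extra reliability out of one network.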

Varying requirements to suit your ‘scenario’

Let’s look at how autonomy plays out in the three scenarios illustrated below. Think of how you would survive each environment as a drone or robot. What does your brain process? What do your eyes see? What do your senses need to process to survive in that moment?

Scenario 1 & 2: Endor and Naboo from Star Wars, Scenario 3: Dodging bullets in The Matrix

Welcome to Endor: Navigating through the hostile environment of Scenario 1 differs greatly from navigating Naboo’s underwater environment in Scenario 2. Fast, secure, and efficient navigation to survive is your mission - you cannot run away. Different sensors and capabilities are needed, with various AI and machine learning algorithms informing the decisions you must make in real time, depending on the environment and mission. In Scenario 3, your sensors process 480 frames per second, and you need to instruct your limbs to take the right sense-and-avoid actions. That is not an easy task for the Nvidia GPU chip that processes this movement to dodge the bullets. Find out more about how they created this scene back in 1999.
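The 480 frames per second in Scenario 3 translates into a very tight processing budget. As a back-of-the-envelope sketch, the snippet below computes how much time is available per frame and how far a vehicle moves between frames. The 15 m/s speed and the 30 fps comparison are illustrative assumptions, not figures from the film or from any specific drone.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / frames_per_second


def distance_per_frame_m(speed_m_per_s: float, frames_per_second: float) -> float:
    """Distance travelled between two consecutive frames, in metres."""
    return speed_m_per_s / frames_per_second


if __name__ == "__main__":
    ASSUMED_SPEED_M_PER_S = 15.0  # hypothetical vehicle speed, for illustration only
    for fps in (30, 480):
        budget = frame_budget_ms(fps)
        gap = distance_per_frame_m(ASSUMED_SPEED_M_PER_S, fps)
        print(f"{fps} fps: {budget:.2f} ms per frame, {gap:.3f} m travelled between frames")
```

At 480 frames per second, everything from sensing to the avoid decision has to fit into roughly 2 ms per frame, compared with about 33 ms at 30 frames per second.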

While you are building your autonomous robot, check out a variety of further scenarios within the Star Wars databank.

As the scenarios show, it can all be quite complicated in reality. Therefore, at Unleash live, we work with our industry partners to bring these variables together.

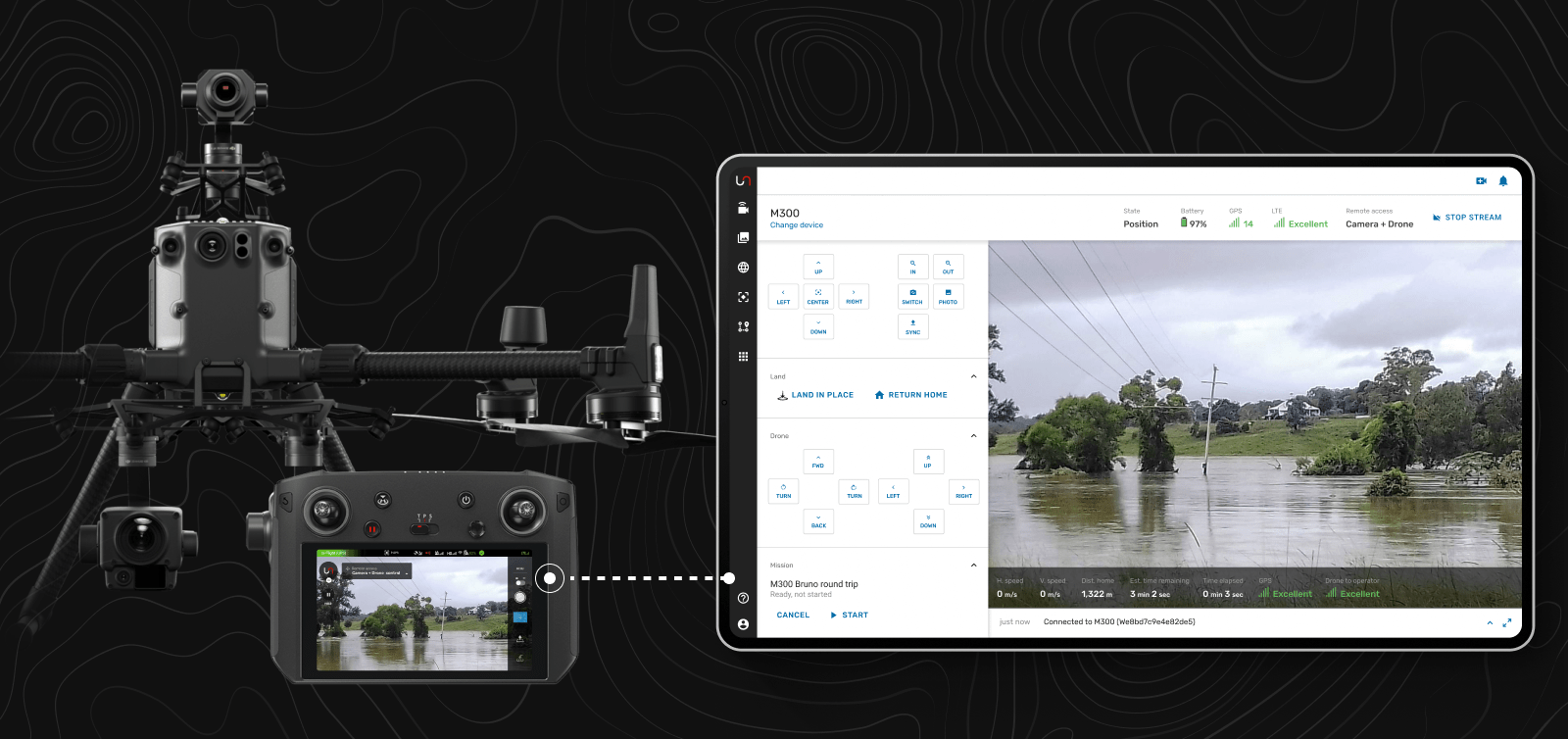

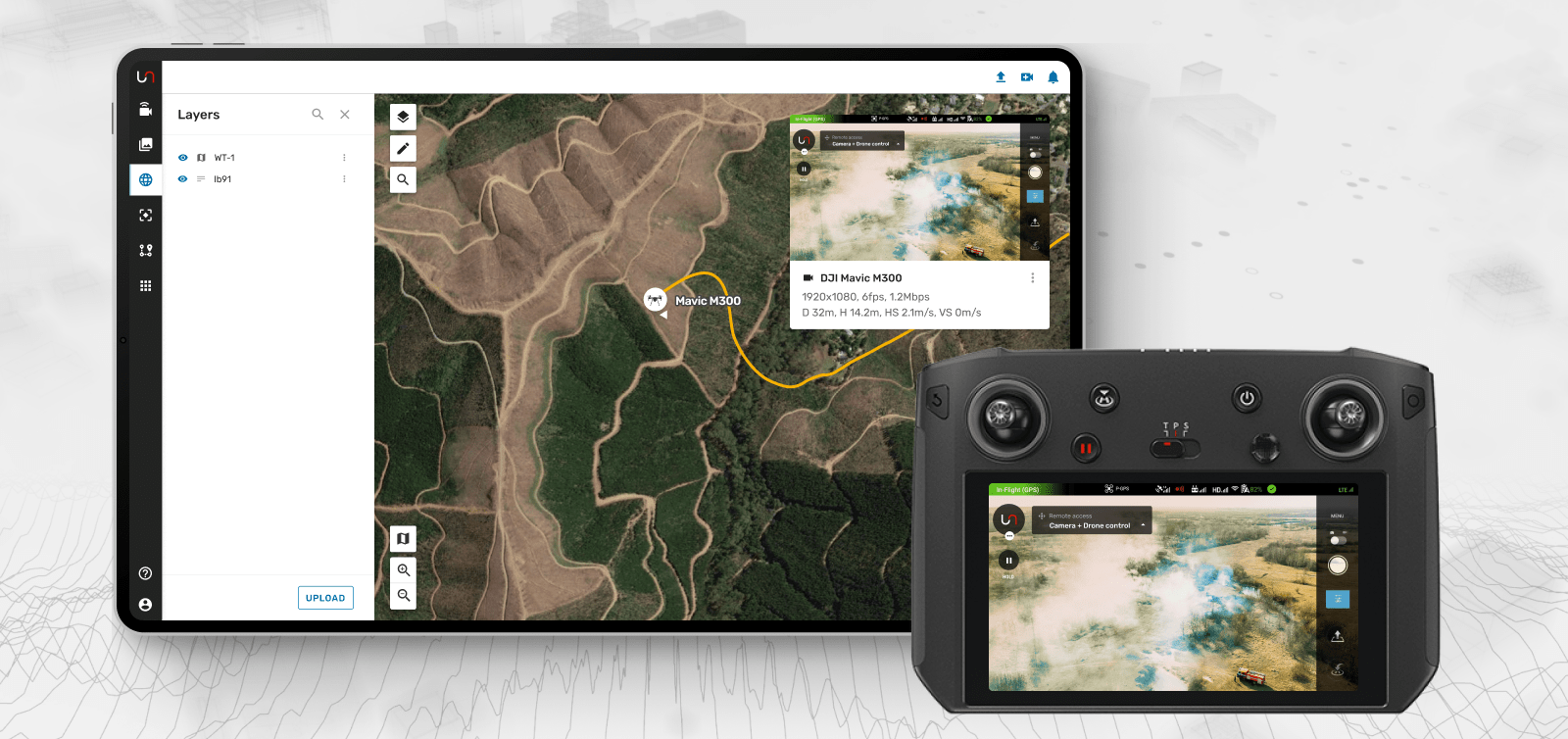

Unleash live’s Autofly is a robotics control platform for remote drone operations that analyzes visual data with AI apps in real time. We believe that the sensor, the payload, and the drone, operating together with a networked, globally scalable platform, all have a part to play in providing the direction for autonomy.

Enabling end-to-end automated workflows with Autofly and Unleash live

The benefit of the Autofly app is that it adapts to different environments and hardware. For example, it is compatible with DJI, ArduPilot, and PX4, giving our customers flexibility in whatever scenario they face. Autofly is always connected to our scalable cloud platform for live streaming of data and video, smart navigation, media syncing, analytics, and geospatial sense. Finally, the AI is constantly improving and can be adapted to deliver the insights that our customers need.

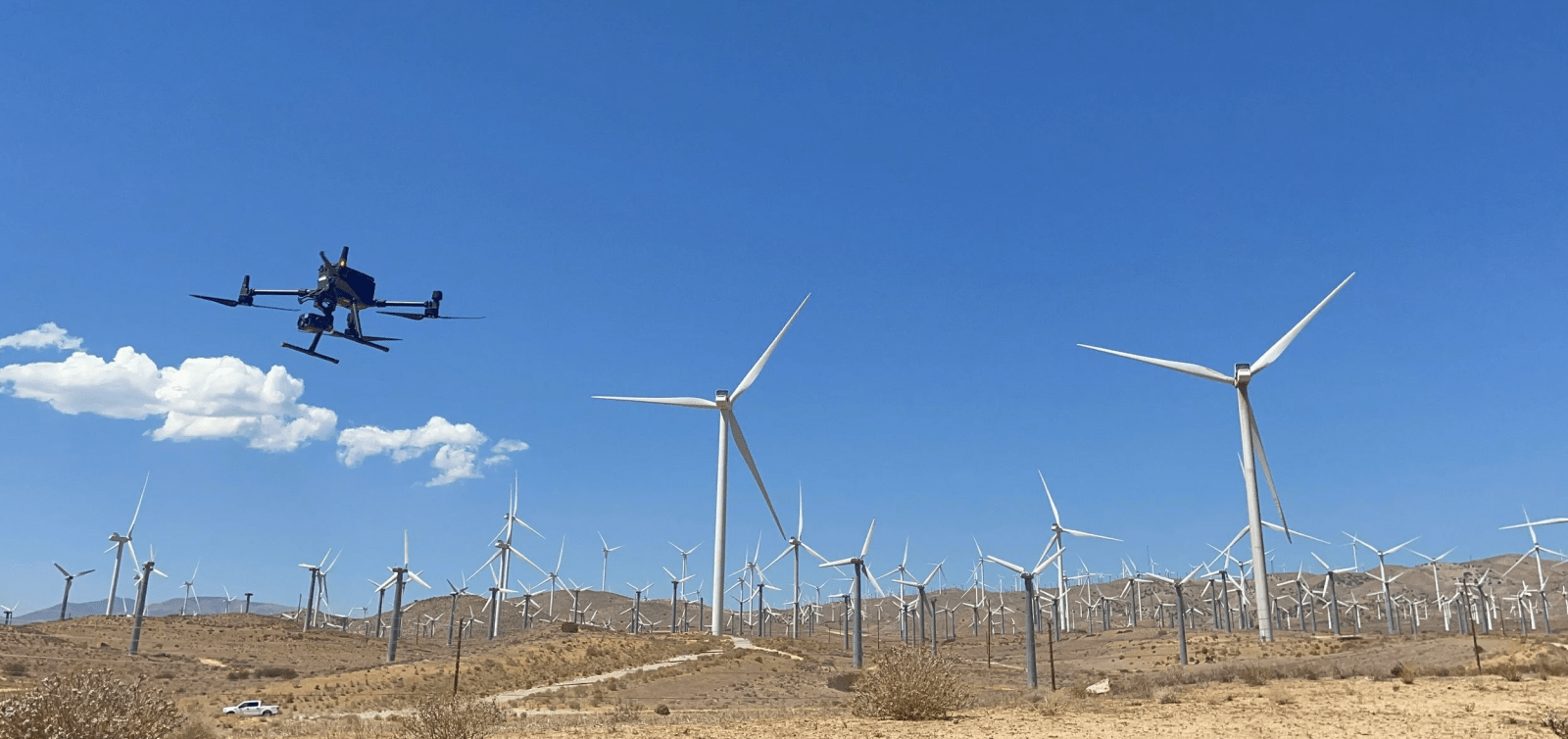

Autofly lets drones fly missions autonomously and gather data while streaming it straight into the cloud in real time, even from thousands of miles away, and analyzes the data as it flows back to customers’ remote command centers. Each mission is dynamic rather than static, which is a big shift in how we deliver missions to our clients. Just as in the scenarios above, where you want to survive or dodge something, our world has its own demanding scenarios: high-definition, highly precise inspections to avoid disasters, and maintenance scenarios for wind, solar, oil & gas, and transport at scale.

Let's bring this to life with a real-life case study. Endeavour Energy has been rated the best-performing electricity network in Australia. They service over 2.5 million people living and working across Greater Sydney. Our client's mission is to quickly, securely, and precisely inspect their 430,000 power poles to produce high-quality, consistent insights into asset condition and degradation over time.

We capture the same image every time, then apply high-performance AI to the visual data to identify faults and damage to the assets and run trend analysis across time to manage the entire network. Reports are delivered straight to the maintenance and inspection teams, thus lowering costs and improving the safety of maintenance.

All of this is integrated into their workflow management system so that they can move towards predictive maintenance and optimize their maintenance field fleet. The solution is fast, scalable, and low cost, and it improves their safety record and service levels as they reduce time spent on manual inspections by more than 50%.
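The trend analysis described above can be pictured with a minimal sketch. This is not Unleash live's method: it assumes each inspection yields a single condition score per pole (a hypothetical 0-to-1 scale where 1 means as new) and fits a least-squares line to estimate how fast that score is falling. The function name and the sample scores are made up for illustration.

```python
def degradation_slope(years, scores):
    """Least-squares slope of condition score against time (score change per year).

    A negative slope means the asset's condition is getting worse.
    """
    n = len(years)
    if n < 2 or n != len(scores):
        raise ValueError("need at least two inspections with matching scores")
    mean_x = sum(years) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    if sxx == 0:
        raise ValueError("inspections must be taken at different times")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, scores))
    return sxy / sxx


if __name__ == "__main__":
    # Hypothetical scores for one pole over four annual inspections.
    inspection_years = [0, 1, 2, 3]
    condition_scores = [0.9, 0.8, 0.7, 0.6]
    slope = degradation_slope(inspection_years, condition_scores)
    print(f"Condition changes by {slope:.2f} per year")
```

Capturing the same image on every visit is what makes a comparison like this meaningful, because changes in the score then reflect the asset rather than the camera angle. Poles with the steepest negative slope would be natural candidates for earlier maintenance.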

Conclusion

The journey towards automation is moving forward at an exponential rate. The world has not yet arrived at true autonomy, but we are on the path and moving quickly towards this end goal. Products such as Autofly, connected to our highly scalable Unleash live media management and analytics platform, are leading the way in autonomous inspections of assets. Learn more about what we can achieve for you by getting in touch.

This article is based on a presentation by Unleash live's CEO, Hanno Blankenstein, at the Commercial UAV Expo in Las Vegas, USA. He shared the stage with Aerial Evolution Association Canada’s Michael Cohen, Skydio’s Brendan Groves, Ai6 Systems’ Alexander Fraess-Ehrfeld, and Ashish Kapoor from Microsoft to discuss 'Drone Autonomy.'